Marketing Smart with Einstein Analytics – Part 4

If you have been following along with this series, ‘Marketing Smart with Einstein Analytics’, you will have seen how to set up the connection from Marketing Cloud to Einstein Analytics and how to access the hidden data extensions. If you send a lot of emails, you will have a lot of tracking data, which means queries can be rather slow to run. So let’s have a look at how to deal with vast amounts of data.

Replicating the Hidden Data Extensions

In part 2 of this blog series, we covered how the first step in accessing the tracking data via the connector is to create a copy of the hidden data extensions, or at least of those we want to use in Einstein Analytics.

To create new data extensions, we need to go to Email Studio (yes, you can also do this in Contact Builder if you prefer). In Marketing Cloud, hover over ‘Email Studio’ and choose ‘Email’.

Now navigate to data extensions by choosing ‘Data Extensions’ in the ‘Subscribers’ tab.

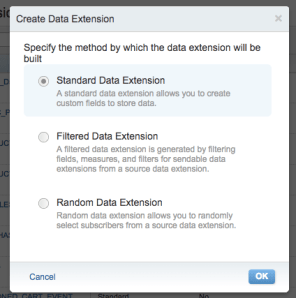

Before we move on and create the data extensions we need, please make sure you have the Help section open for the hidden data extension you want to replicate. In this case, I am recreating the Open data extension, so the Help section gives us an idea of how our new data extension should look. Click the blue ‘Create’ button, choose ‘Standard Data Extension’ and click ‘OK’.

Give the new data extension a name and click ‘Next’.

In the next section, we will just click ‘Next’, since we don’t need to configure data retention.

Now comes the interesting part: you need to recreate every single field from the hidden Open data extension in this new data extension. Make sure that the name, data type, length and nullable setting of each field exactly match the data view. Once you are done, click ‘Create’.
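Because every field has to match on four properties, it is easy to miss one while clicking through the wizard. As a rough checklist, the Python sketch below compares a field list for the data view against the fields you created in the replica. The `Field` class, the `schema_mismatches` helper and the values in `OPEN_DATA_VIEW` are hypothetical placeholders for illustration; take the real names, data types, lengths and nullable settings from the Help section, not from this snippet.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Field:
    name: str
    data_type: str
    length: Optional[int]  # None for types that have no length setting
    nullable: bool


# Placeholder values for illustration only: copy the real definitions from the Help section.
OPEN_DATA_VIEW = [
    Field("JobID", "Number", None, False),
    Field("SubscriberKey", "Text", 254, False),
    Field("EventDate", "Date", None, False),
    Field("IsUnique", "Boolean", None, True),
]


def schema_mismatches(source: List[Field], replica: List[Field]) -> List[str]:
    """Return human-readable differences between the data view fields and the replica fields."""
    problems = []
    replica_by_name = {f.name: f for f in replica}
    for src in source:
        rep = replica_by_name.get(src.name)
        if rep is None:
            problems.append(f"missing field {src.name}")
        elif rep != src:
            # Dataclass equality compares name, data type, length and nullable together.
            problems.append(f"{src.name}: expected {src}, got {rep}")
    # Fields that exist only in the replica are also worth flagging.
    for name in sorted({f.name for f in replica} - {f.name for f in source}):
        problems.append(f"unexpected field {name}")
    return problems
```

For example, a replica where `SubscriberKey` was created with length 100 and where `EventDate` and `IsUnique` were forgotten produces three messages, one per problem.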

Extracting That Data

Once the data extension is created, we are ready to populate it. Because we are dealing with vast amounts of data, we will extract it differently than we did in part 2. For this setup, make sure you have created your Marketing Cloud FTP account. You also need a CSV file containing the columns of the data extension.
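If you don’t have that CSV file yet, a header-only file is enough for mapping the columns later. A minimal Python sketch, assuming the column names in `COLUMNS` (hypothetical; use your own data extension’s field names in the same order), generates one with the standard `csv` module:

```python
import csv
import io
from pathlib import Path
from typing import List

# Hypothetical column list: replace it with the exact field names of your replica data extension.
COLUMNS = ["JobID", "SubscriberKey", "EventDate", "IsUnique"]


def header_only_csv(columns: List[str]) -> str:
    """Return CSV text that contains only a header row with the given column names."""
    buffer = io.StringIO()
    csv.writer(buffer).writerow(columns)
    return buffer.getvalue()


def write_header_file(path: Path, columns: List[str]) -> None:
    """Write the header-only CSV to disk so it can be uploaded when mapping columns."""
    # newline="" stops Python from translating the csv module's \r\n line ending.
    with open(path, "w", encoding="utf-8", newline="") as handle:
        handle.write(header_only_csv(columns))
```

Calling `write_header_file(Path("open_columns.csv"), COLUMNS)` produces a one-line file; the file name is only an example.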

Now let’s move to Automation Studio: navigate to the ‘Journey Builder’ tab in the menu and choose ‘Automation Studio’. Note that the individual activities we will create can also be set up from Email Studio if you prefer.

Once in Automation Studio, create a new automation by clicking the blue ‘New Automation’ button in the top right corner.

It is best practice to always give your automation a name, which you can do in the top left corner where it says ‘Untitled’. Once you have named it, remember to click the ‘Done’ button.

Next, we define how the automation will start. Since I want it to run on a schedule, I drag the ‘Schedule’ starting source into the starting source area of the canvas.

We now need three activities on the canvas to move data from the hidden Open data extension into the replica data extension we created. The first activity is a ‘Data Extract’, so find it on the left under ‘Activities’ and drag it onto the canvas on the right.

With the activity on the canvas, click ‘Choose’ to set up a new data extract. This opens a dialog box where you can create a new data extract activity.

Make sure to give your data extract activity a name and a file naming pattern. The latter determines the name of the file the extract produces, which will end up on your FTP account. Finally, make sure the extract type is ‘Tracking Extract’, then click ‘Next’.

On the next screen, there is quite a bit to consider. The first is the date range of your data extract; in my case, I have chosen a rolling range of 90 days. Account ID refers to the business unit (MID) you want to pull data from. The easiest way to find that ID is to hover over the business unit name to the left of your own name, and then hover over the business unit of your choice.
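To see what a rolling range means for a scheduled automation, here is a small Python sketch. It assumes the window ends on the day the automation runs and starts 90 days earlier; the exact boundaries Marketing Cloud uses (whether today is included, which time zone applies) are not taken from its documentation, and `rolling_range` and `overlap_days` are hypothetical helpers.

```python
from datetime import date, timedelta
from typing import Tuple

Window = Tuple[date, date]


def rolling_range(today: date, days: int = 90) -> Window:
    """Return (start, end) of a window that ends on `today` and starts `days` days earlier.

    Assumption for illustration: the real inclusive/exclusive boundaries may differ.
    """
    return today - timedelta(days=days), today


def overlap_days(window_a: Window, window_b: Window) -> int:
    """Return how many days two (start, end) windows share, measured as end - start."""
    start = max(window_a[0], window_b[0])
    end = min(window_a[1], window_b[1])
    return max((end - start).days, 0)
```

With a daily schedule, `rolling_range(date(2019, 3, 31))` gives `(date(2018, 12, 31), date(2019, 3, 31))`, and the next day’s window shares 89 of those 90 days. Each run therefore re-extracts almost the same opens, which is why replacing the data on every run, rather than appending it, avoids storing the same opens twice.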

The last thing is to choose what you want to extract, which in my case is ‘Extract Open’. Once it is selected, click ‘Next’, and then ‘Finish’ on the summary screen.

The next activity we need is a ‘File Transfer’, which picks up the file the extract just created. Go ahead and drag that activity onto the canvas as the second step.

We now need to create a new file transfer activity, so click ‘Choose’ to open the dialog box and click ‘Create New File Transfer Activity’ in its top right corner, just like we did with the data extract.

Again, give the activity a name, and choose ‘Move a File From Safehouse’ as the action type. Then click ‘Next’.

On the next screen, first set the file naming pattern for the file to pick up, which we defined in the data extract earlier. In my case, I simply called it ‘Open’. We also need to pick the destination for the transfer, which will be our FTP account. We are now ready to click ‘Next’ and ‘Finish’.

The last activity we need is ‘Import File’, so find it and drag it over as the third step.

Again, choose and create a new activity just as we did with the previous two, give it a name and click ‘Next’.

Now choose the destination you picked in the file transfer activity and enter the same file naming pattern you used previously. You will get a warning here because we have not yet run the two previous steps, so no file exists yet; go ahead and click ‘Next’ anyway.

Next, pick the data extension you want to populate, which is the one we created earlier, and click ‘Next’.

We now have to define the type of data import. Depending on how you set up your automation, you may want a different type, but to illustrate what is possible I will just use ‘Overwrite’. Next, we need to map the columns, which we will do by mapping them manually. Once that is selected, the dialog box changes a bit, and we need to upload the CSV file I mentioned as a prerequisite so we can map the columns. Once the file is uploaded, map the columns by dragging each column header on the left to its matching field on the right. When you are done, click ‘Next’ and ‘Finish’.
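To make the column mapping and the choice of import type more concrete, here is a simplified Python model; it is not Marketing Cloud’s implementation. `apply_mapping` renames file columns to data extension fields, much like dragging the headers across; `overwrite` mirrors ‘Overwrite’ by replacing every existing row; and `add_and_update` approximates an upsert on a primary key, simplified so that it replaces whole rows. The header names in `EXAMPLE_MAPPING`, such as `SendID`, are hypothetical.

```python
from typing import Dict, List

Row = Dict[str, str]

# Hypothetical mapping from file column headers (left) to data extension fields (right).
EXAMPLE_MAPPING = {"SendID": "JobID", "SubscriberKey": "SubscriberKey", "EventDate": "EventDate"}


def apply_mapping(rows: List[Row], mapping: Dict[str, str]) -> List[Row]:
    """Rename file columns to data extension fields; columns without a mapping are dropped."""
    return [{mapping[col]: value for col, value in row.items() if col in mapping} for row in rows]


def overwrite(existing: List[Row], incoming: List[Row]) -> List[Row]:
    """Model of 'Overwrite': discard all existing rows and keep only the incoming ones."""
    return list(incoming)


def add_and_update(existing: List[Row], incoming: List[Row], key: str) -> List[Row]:
    """Simplified upsert: update rows whose key already exists, append the rest."""
    merged = {row[key]: row for row in existing}
    for row in incoming:
        merged[row[key]] = row  # a matching key replaces the whole row in this model
    return list(merged.values())
```

Because a rolling extract brings back mostly the same rows on every run, ‘Overwrite’ keeps the replica equal to the latest extract, while an upsert only works if the data extension has a primary key that uniquely identifies each open.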

Your automation should now have three steps and look somewhat like mine in the picture below.

Now save your automation, and afterwards run it once, using the two buttons in the top right corner.

After you hit ‘Run Once’, you need to pick which activities to run. You want to make sure they all work together, so select all of them and click ‘Run’.
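It can help to picture what happens to the file when the three activities run in order. The sketch below is a purely local Python model, not Marketing Cloud’s API: two temporary folders stand in for the Safehouse and the FTP account, and `data_extract`, `file_transfer`, `import_file` and `run_once` are hypothetical names. It also shows why the Import File activity warns while no file exists yet.

```python
import csv
import shutil
import tempfile
from pathlib import Path
from typing import Dict, List

Row = Dict[str, str]


def data_extract(safehouse: Path, pattern: str, rows: List[Row]) -> Path:
    """Step 1 (model): write the extracted rows to a CSV named after the file naming pattern."""
    if not rows:
        raise ValueError("nothing to extract")
    path = safehouse / f"{pattern}.csv"
    with path.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=list(rows[0]))
        writer.writeheader()
        writer.writerows(rows)
    return path


def file_transfer(safehouse: Path, ftp: Path, pattern: str) -> Path:
    """Step 2 (model): move the file matching the pattern from the safehouse to the FTP folder."""
    source = safehouse / f"{pattern}.csv"
    return Path(shutil.move(str(source), str(ftp / source.name)))


def import_file(ftp: Path, pattern: str) -> List[Row]:
    """Step 3 (model): read the file; returning only its rows mimics an 'Overwrite' import."""
    path = ftp / f"{pattern}.csv"
    if not path.exists():
        # Same situation as the warning shown before the earlier steps have ever run.
        raise FileNotFoundError(f"no file matching {pattern!r} in {ftp}")
    with path.open(newline="", encoding="utf-8") as handle:
        return list(csv.DictReader(handle))


def run_once(rows: List[Row], pattern: str = "Open") -> List[Row]:
    """Run all three steps in order inside throwaway folders and return the imported rows."""
    with tempfile.TemporaryDirectory() as root:
        safehouse = Path(root, "safehouse")
        ftp = Path(root, "ftp")
        safehouse.mkdir()
        ftp.mkdir()
        data_extract(safehouse, pattern, rows)
        file_transfer(safehouse, ftp, pattern)
        return import_file(ftp, pattern)
```

Running only the import step against an empty FTP folder raises `FileNotFoundError`, whereas `run_once` with all three steps returns the extracted rows, which is why selecting every activity for the first run is the useful test.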

If the run succeeds, go ahead and schedule your automation. Every time it runs, you will have fresh tracking data to pull in with the Marketing Cloud connector in Einstein Analytics. Please have a look at part 1 of this blog series to see how to sync the data extension in Einstein Analytics.

Another great, useful blog, Rikke. Could you help qualify when to use Einstein Analytics and when to use the new acquisition, Datorama, for MC analytics?

Thanks!

Tbh, I am not too sure, as I haven’t looked at Datorama in depth. From a quick look, though, it seems to have connectors to more marketing solutions, such as Social Studio, whereas EA at this point only has Marketing Cloud as a connector (there is of course also the B2B app for Pardot). Also remember that some companies use Marketing Cloud but not Salesforce, and since Einstein Analytics requires a Salesforce license, EA is probably not always in scope.